Getting Stronger with AI Powered — The Institute of Media Environment’s View on CES 2025

Introduction

CES (Consumer Electronics Show) is one of the world’s largest consumer electronics and IT trade fairs, held every year in Las Vegas. Companies from around the world showcase their latest technologies and products, offering a glimpse into the future of everyday life. The Institute of Media Environment covers CES every year, focusing not simply on the “latest devices and products” themselves but on their context: what kind of future lifestyles can be envisioned from the wide range of devices, products, and concepts unveiled.

So, what new context emerged from CES 2025? We would like to share our findings with you again this year.

Japanese Companies Shone

At CES 2025, the rebound in the number of Chinese exhibitors, the continued progress of South Korean companies, and the strong performance of Japanese companies were particularly noticeable. Toyota held a press conference for the first time in five years, and Panasonic made headlines by delivering the keynote speech on the first day of the event.

In addition, four Japanese-related products received the “Best of Innovation” awards announced by the organizers, including Kubota’s autonomous agricultural vehicle and the power-assisted prosthetic leg developed by BionicM. Considering that only 34 “Best of Innovation” awards were given and that Japanese-related companies typically received only one or two in previous years, their achievements stood out.

Japanese-related booths also attracted more visitors than in previous years and were buzzing with activity. Many of the technologies presented had the potential to actually transform daily life, healthcare, and business, drawing interest from stakeholders from various countries.

The Beginning of AI in Everyday Life

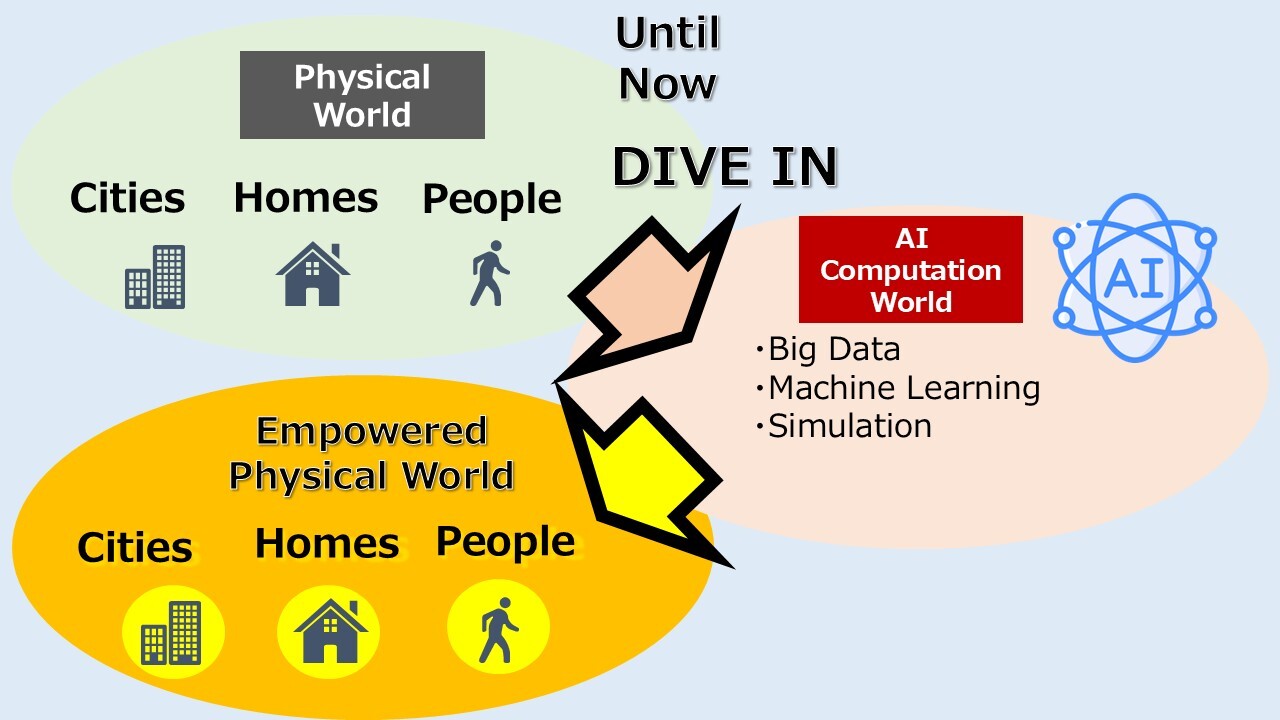

The overall theme of CES 2025 was “DIVE IN,” and the show presented a vision of the future in which AI technologies envelop cities, homes, and people, empowering many aspects of daily life. In other words, every point of contact in daily life is set to “dive in” to AI technology and be transformed, and this vision of the future was clearly on display.

So, what specific changes are about to take place? Let’s explore this by looking at some of the most notable announcements.

Transforming Cities

Toyota returned to CES for the first time in five years to present the progress of its Woven City concept. Woven City is a research city designed to create human-centered technologies, expand mobility, and cultivate happiness at scale. Residency is set to begin in the fall of 2025, with various pilot experiments planned.

In Woven City, AI-driven video analysis, known as Vision AI, will be used to closely monitor people’s movements and conditions, supporting a wide range of services and safety management. Technologies being tested include a system that automatically detects people who have fallen and guides them to safety, and robots that use machine learning to fold laundry. Various other service experiments are also planned.

Homes Watch Over People, Delivering Comfort, Safety, and Health

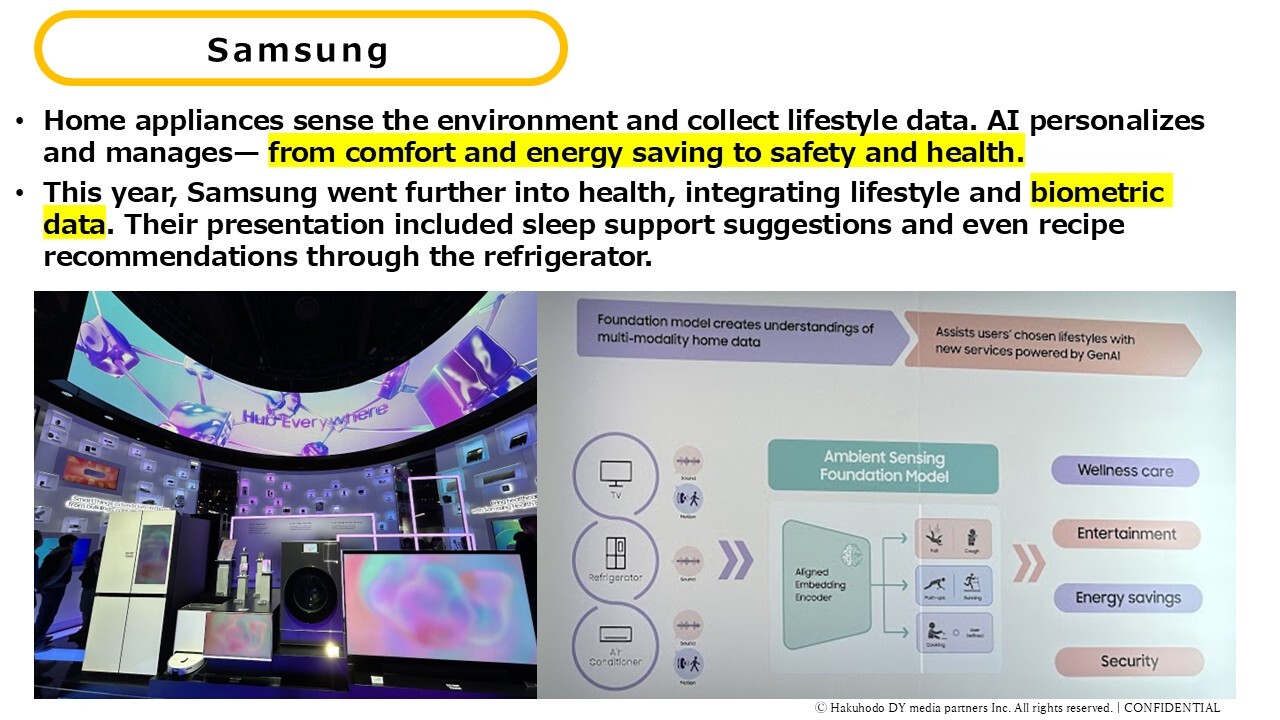

Home appliance manufacturers such as Samsung and LG have long been proposing ways for homes to “watch over” residents and provide various forms of value. Both companies have previously showcased visions of AI-connected smart homes, where appliances anticipate needs such as adjusting the room temperature or turning off the lights. This year, they appear to be preparing for full-scale, real-world deployment.

Samsung is promoting ambient sensing, in which home appliances collect data by sensing residents’ activities. Televisions, refrigerators, air conditioners, and other devices monitor household movements and sounds, and the collected data is used to personalize and enhance daily life. A major development this year is the expansion into health management. Demonstrations included healthcare services that combine biometric data from smartwatches with daily activity data to offer, for example, sleep support and recipe suggestions through the refrigerator.

For example, by combining daily exercise data with biometric information, a refrigerator could suggest, “Based on your current activity level and condition, the chicken and cabbage in your fridge would be the best choice.” This goes beyond simply recommending recipes based on available ingredients and represents an advanced level of personalization made possible by integrating lifestyle and biometric data.

However, lifestyle and biometric data like these are highly private, and some people may feel uneasy about sharing them with companies. To address this, Samsung featured its security platform, Knox, prominently in its press conference and exhibits, proposing a safe and secure AI-connected system designed for real-world use. This suggests that Samsung aims to gain a competitive edge in the AI smart home market by collecting data from living spaces on a foundation of trust and reliability.

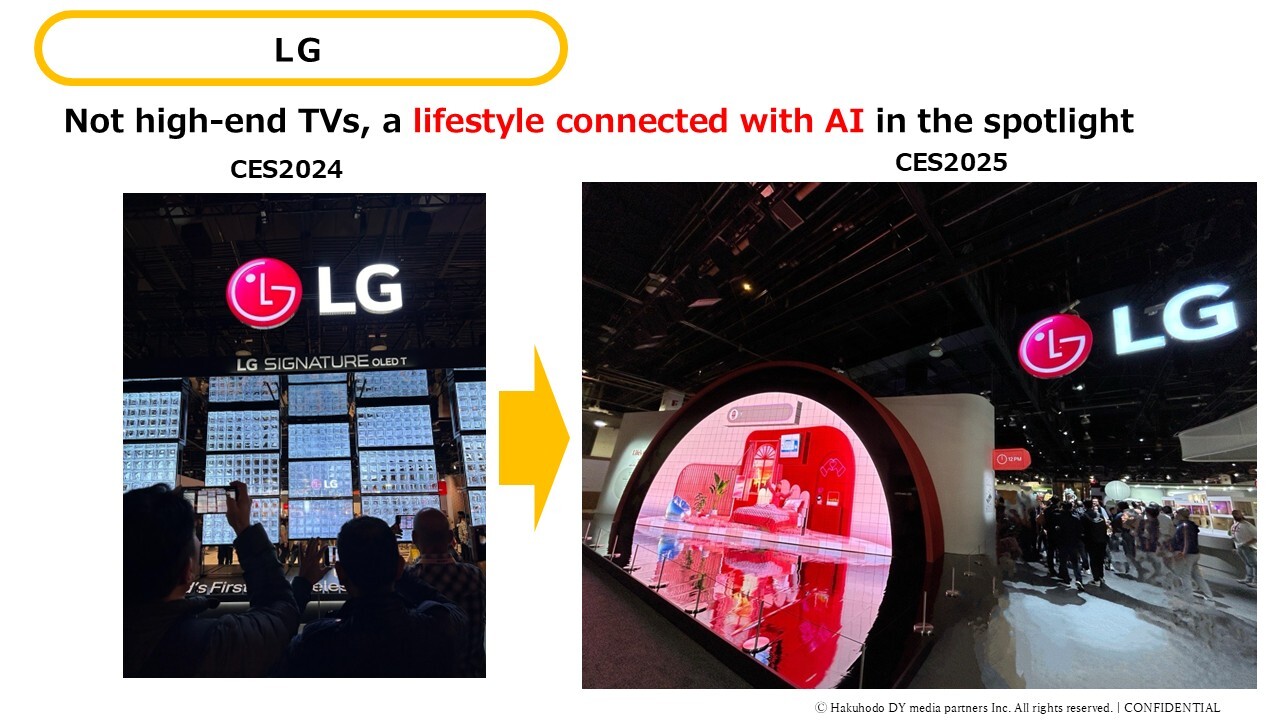

Meanwhile, LG’s approach to its exhibition changed significantly from previous years. In the past, the focus was on the evolution of TVs, such as transparent high-definition displays, but this year, LG emphasized comfortable and healthy living connected with AI. This clearly demonstrates LG’s serious commitment to the real-world implementation of AI smart homes.

LG acquired the Dutch smart home platform company Athom last year. Athom has a platform that can connect with more than 1,000 apps and over 50,000 devices, including Philips Hue and IKEA smart home products. With this acquisition, LG greatly expanded connectivity not only with its own products but also with those of other companies.

LG then released APIs for developing apps and services that make full use of this wide range of connected appliances. By building an open ecosystem, LG aims to let many companies develop services and applications that use LG’s AI. This is a strategic move aimed at the “spatial data” that lies beyond the AI smart home competition itself. The spaces where people live and act are full of valuable data, and by opening up its system to many companies, LG can obtain a massive amount of living space data. This clearly reveals LG’s strategy of becoming a “spatial AI platform provider.”

Is the Competition for Living Space Data Starting?

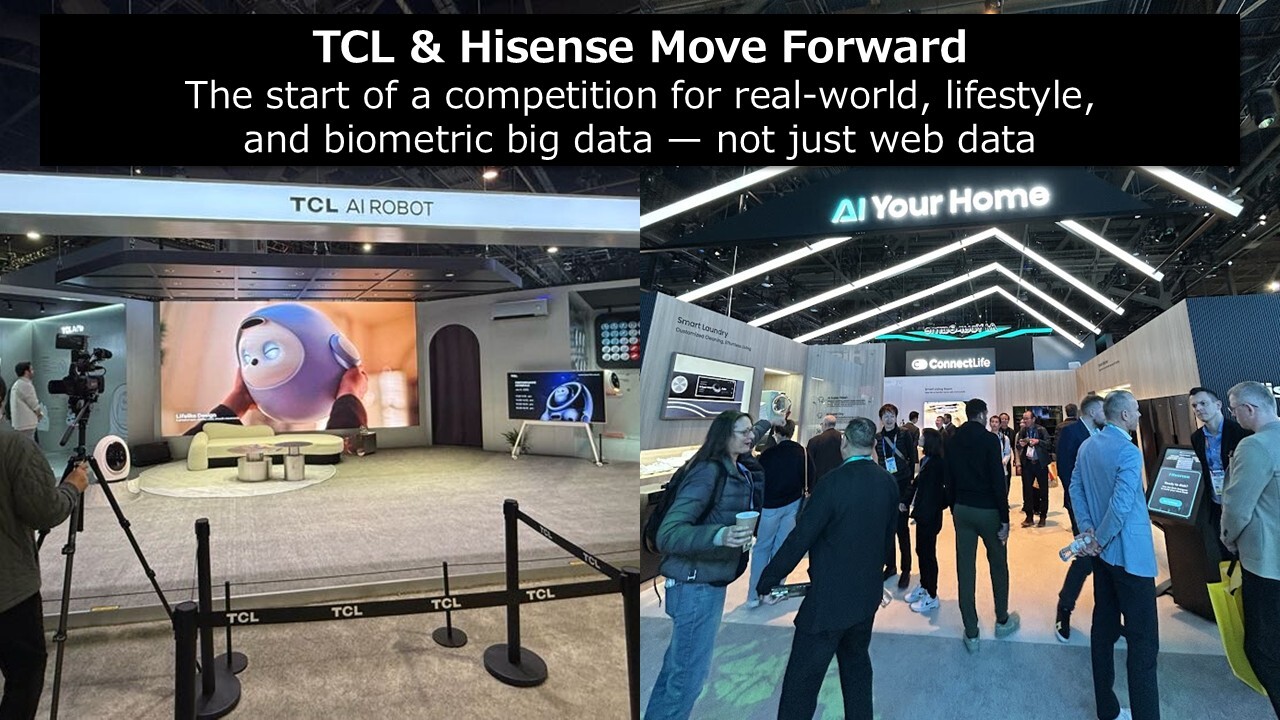

The moves of Chinese home appliance makers such as TCL and Hisense further highlighted the potential for integrating AI into our living spaces.

In the past, both companies mainly focused their CES exhibits on large, high-quality TVs. This year, however, their showcases featured “AI-connected living.” TCL, for the first time, unveiled an AI companion robot. With its cute appearance, the robot is designed not only to play with children and record memories, but also to watch over the home and collect data. Hisense, on the other hand, promoted the slogan “AI Your Home” and introduced its ConnectLife platform, which connects and controls all smart home appliances, offering a vision of more comfortable living.

In recent interviews with AI researchers, The Institute of Media Environment also heard concerns about a shortage of online information for AI to learn from. Samsung’s push for ambient sensing, LG’s clear commitment to building a spatial AI platform, and the entry of major Chinese players into the AI smart home market all point to a new trend. Now that AI has absorbed much of the information available on the web, these moves signal the start of a “competition for living space data,” led by home appliance makers, which have a unique advantage in accessing consumer behavior data.

Mobility That Senses People

As EVs and autonomous driving advance, technologies that differentiate the in-car experience have drawn attention in mobility, the link between homes and cities. At this year’s show, Honda unveiled the prototypes of the Honda 0 SALOON and Honda 0 SUV for the first time anywhere in the world. The Honda 0 Series senses various aspects of the occupants’ conditions, emotions, and conversations inside the car. Using this data, AI can not only adjust the temperature but also learn personal preferences such as color and music to create a comfortable in-car environment.

Honda also announced ASIMO OS as the foundation for delivering this comfortable in-car experience. ASIMO OS is designed to manage driving control and UI/UX in an integrated way, enabling both advanced autonomous driving functions and a comfortable in-car environment. To deliver this integrated experience, the system is expected to run on cutting-edge 3-nanometer semiconductors jointly developed by Honda and Renesas Electronics.

The concept of cars sensing occupants and AI delivering a personalized, comfortable experience is also being discussed by companies such as Hyundai Mobis and Sony Honda Mobility’s AFEELA, indicating that this is likely to become a major trend in the future of mobility.

Healthcare: Continuous Sensing and Monitoring Anywhere

In cities, homes, and cars, people’s conditions are being sensed, and AI provides personalized suggestions based on them. Within this trend, healthcare is also evolving, with AI-powered technologies for monitoring health. A major trend is the ability to sense and monitor one’s health easily and continuously, anywhere.

Xandar Kardian introduced a contactless sensor that is placed beside the bed to monitor health conditions and send alerts to family members if any danger is detected. The device “being u” continuously monitors a person’s voice to detect comfort or discomfort and offers suggestions for a happier lifestyle. In addition, Withings presented the concept model OMNIA, a smart mirror that can check the user’s overall health when they simply stand in front of it. This year, many devices were showcased that make it easy to monitor people’s health continuously.

What was notable about these products is that they can provide detailed insights into a person’s physical and mental condition with minimal input—just by observing facial expressions or appearance, listening to voice or heartbeats, or using breath, saliva, or urine samples. By learning from big data on human health, AI can deliver important results from even small amounts of input. With these technologies, our health can be continuously monitored, and AI can offer appropriate advice and support.

The Era of AI-Supported Human-Machine Integration Begins

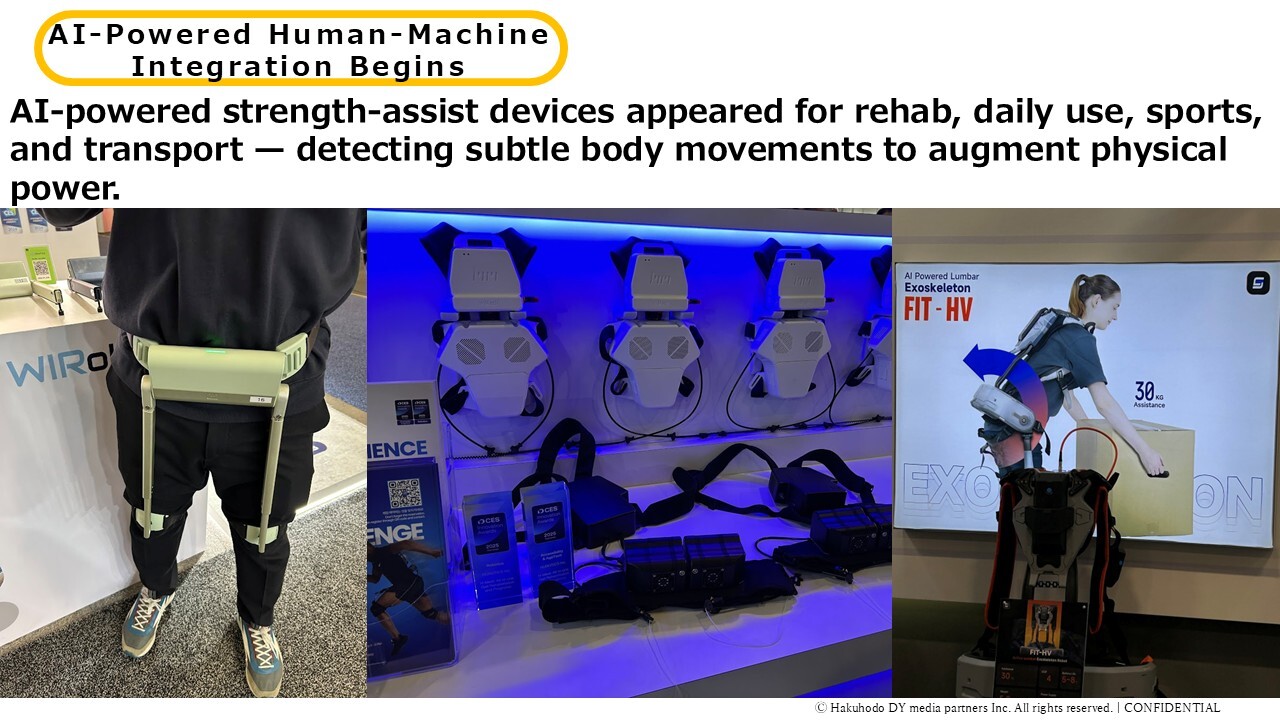

Among technologies that support the human body, human-machine integration has also advanced, with devices such as robotic prosthetics and exoskeletons that directly assist the body itself.

Representative examples include BioLeg, an algorithm-driven robotic prosthetic leg that won this year’s Best of Innovation Award, and HyperShell, an AI-powered exoskeleton designed to enhance walking and running.

These human-machine integrated devices were showcased in many forms—whether for rehabilitation, daily use, sports, or heavy lifting. What they all have in common is that AI detects subtle body movements, allowing the device to support and enhance human motion.

I personally tried one of the walking support devices again this year. Last year, I felt it pulled my thighs rather forcefully, but this time it provided a much smoother and more comfortable walking experience. According to the staff, this improvement came from AI learning human movements and refining the algorithms. This is a clear example of how AI-driven improvements can deliver more natural and comfortable motion enhancement.

From Strengthening Our Digital Lives with AI to Enhancing Our Entire Lives

As we’ve seen so far, cities, homes, people—and even our bodies—are now being sensed in full 360 degrees. Based on this data, AI is beginning to support us across many aspects of daily life, making experiences more comfortable and more empowering.

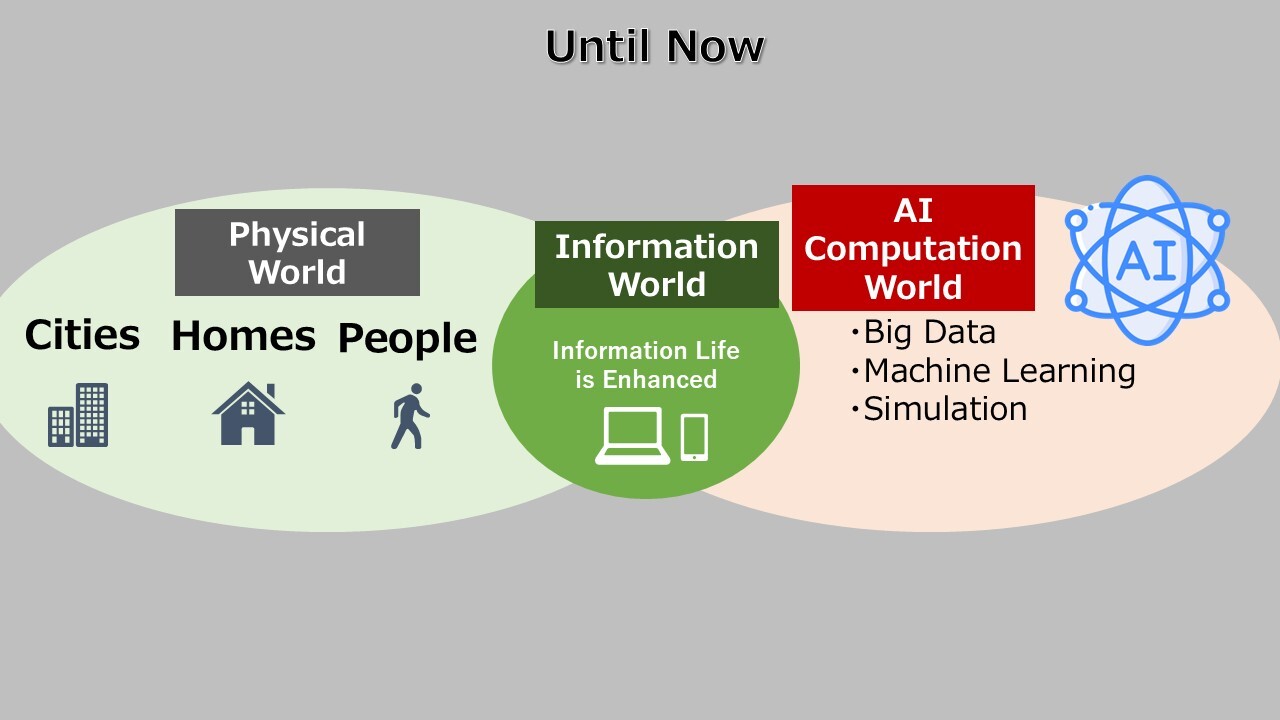

So, what is the real change that lies ahead? It is the shift toward every part of our lives being enhanced by AI—better, more efficient, and more comfortable. Until now, we have been surprised and delighted by the rise of generative AI, but that excitement was mainly about the digital world—how it made information on our smartphones and PCs more accessible, efficient, and enjoyable.

From now on, our physical world (cities, homes, and people) will be sensed in 360 degrees, and that data will be sent into the computational realm of AI. There, AI will learn from the data and refine it further through simulations of situations that cannot be tested in the real world. The strengthened results will then be returned to the physical world, to our cities, our homes, and ourselves, helping to realize a safer, more convenient, more comfortable, and more efficient life through a variety of devices.

AI and People’s Lives: AI Agents Become the Simple Point of Connection

As AI becomes more deeply embedded in our daily lives, how will the point of connection between AI and people evolve? Behind the scenes, AI performs complex computations in real time, but the interface may become much simpler—through AI agents. These agents will interact with users through natural language, offering personalized suggestions and taking actions across different devices. Last year, companies like L’Oréal and Walmart introduced conversational AI for personalized shopping experiences. This year, however, we’re seeing more advanced agents emerging—designed to actively support and enhance people’s everyday activities.

For example, Panasonic introduced “Umi,” an AI coach for families. Umi supports family life by offering advice that helps families be happier, and it even manages the schedules of every family member. Delta Air Lines, meanwhile, presented an AI concierge designed to assist with air travel, which provides key information such as departure times and boarding gates through smart glasses. AI agents like these, which stay close to people and support their daily activities, will become increasingly essential in the coming era in which AI enhances every aspect of life.

And these AI agents are no longer limited to smartphones or PCs. They will be embedded across a wide range of devices—TVs, cars, refrigerators, washing machines, earphones—allowing people to interact with AI anytime, anywhere, enhancing their daily lives.

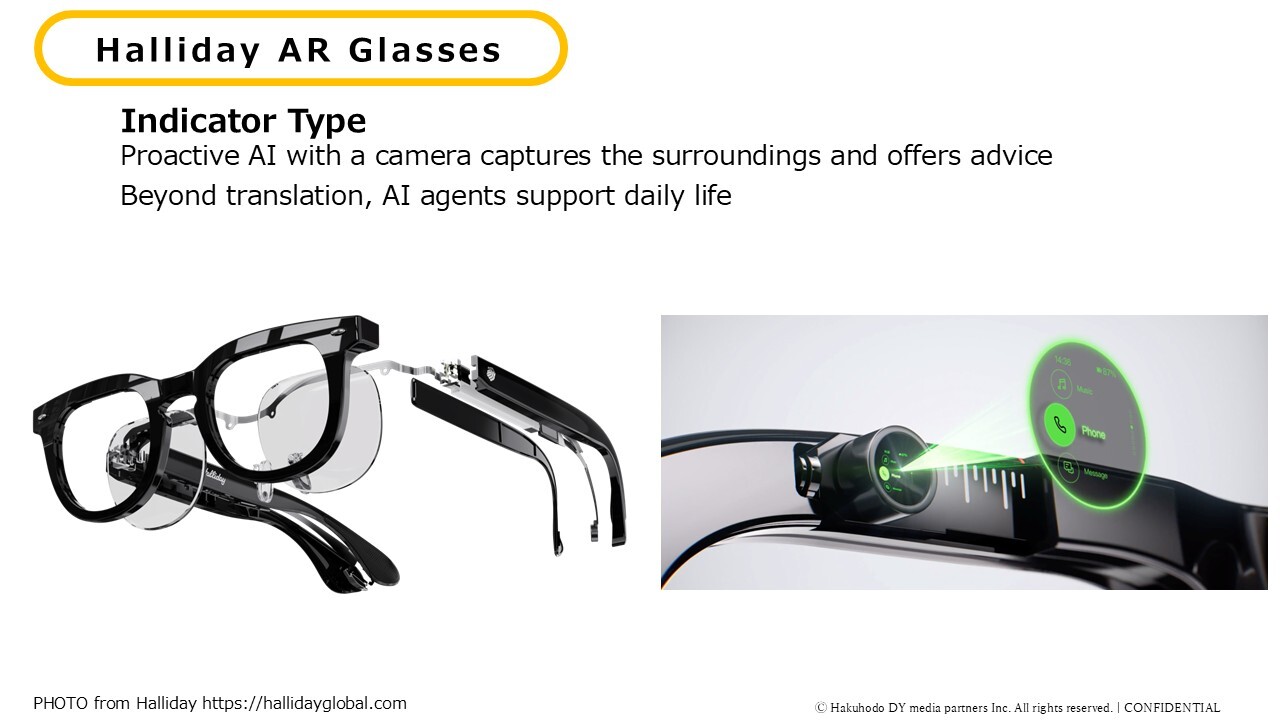

As devices that live alongside AI, smart glasses drew particular attention this year. One highlight was Halliday, a lightweight pair of smart glasses weighing only 35 grams but offering up to 12 hours of use. Halliday comes with proactive AI that listens to surrounding conversations and recognizes situations, then provides timely support based on the user’s needs. In its concept video, for instance, the glasses understood the flow of a long, complex meeting and offered suggestions like “This proposal would be effective,” directly on the display. With multilingual translation also built in, Halliday truly represents an “AI agent device that enhances everyday life.”

Communication Transformed by AI Agents

The rise of AI agents will also bring major changes to how we communicate. As conversations with AI agents emerge as a new touchpoint, companies will need to develop communication strategies tailored to them. Just as search engine optimization once became essential for being discovered online, businesses will now need to ensure that their products and content are recognized and recommended by AI agents.

Furthermore, the way creativity is expressed will also change. Today, advertising can be targeted based on consumer “attributes” such as age, gender, or location. But in the future, sensing technologies will reveal consumers’ “states”—their health, emotions, and overall mood. This raises new questions: How should an AI agent talk about your products or content depending on a person’s state? And if advertising can also be delivered around that interaction, what kinds of expressions can hit the mark—perfectly matching someone’s state—without feeling intrusive or unpleasant? This shift will call for a new kind of creativity we haven’t seen before.

Conclusion

At CES 2025, we were able to see the larger “context” of how AI technology is shaping our daily lives. AI is no longer just a tool for the digital space. It is now blending into our entire living environment, using 360-degree sensing to gather information and, together with various devices and AI agents, making our lives stronger and richer.

Just as generative AI has advanced dramatically in just two years, this kind of future is likely to arrive sooner rather than later. To prepare for such a major shift, companies will need to take various actions: developing AI-powered devices, creating AI agents tailored to their product domains, and producing information that AI agents can recognize. Of course, the outcomes of these efforts are uncertain, and there will naturally be concerns. Yet, to understand what concrete changes may come and how to respond, it is crucial to take even small steps and accumulate experience.

Whether or not to take that small step can make a huge difference in a rapidly changing era. Japanese companies, with their strength in understanding and supporting the subtle needs of consumers in an island nation, should have small steps that only they can take.
